I've recently been trying to consolidate my shader management code. I've been migrating Clayworks from fixed-platform purgatory on DirectX to multi-platform nirvana using OpenGL/GLES2. I've wanted to do this for ages but other work always took priority. I'd laid the groundwork fairly well by abstracting off a central graphics context class - not as a virtual interface but as a static interface layer between the system graphics library and my own code, with lots of helper functions built in for my particular needs and a small subset of platform-dependent code.

Implementing the OpenGL version took a lazy week of evening hacking while on holiday. It was quite satisfying to see Clayworks start up and know that it's nearly defenestrated. That's not to say that it won't be on Windows, it's just that it won't be stuck there.

These days, OpenGL and DirectX are mostly just a thin layer of glue between application code and shaders. This may all change if AMD's Mantle gains traction (and I certainly want to use it) but converting between one set of calls to create vertex buffers and another is just a bit of boilerplate work - it takes as long as it takes but it's hardly interesting or challenging.

The difference between shader languages is a little more involved. I have a set of GLSL shaders and HLSL shaders that do similar (but not quite the same) things. GLSL is a more streamlined language than HLSL (or NVIDIA's Cg, which is really similar) and that has its advantages, particularly for driver writers.

Unfortunately, there's a lot of inconsistency in the quality of GLSL implementations and, in my work on ExoMiner, I found it necessary to add functionality for including files (there's no #include in GLSL) and also for optimization (some mobile platforms just don't do it - I've been using the GLSL optimizer project). For the Clayworks OpenGL push, I wanted to take that a bit further.
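To give a flavour of what the include handling involves, here is a minimal sketch in Python (not the actual ExoMiner code). The `#include "file"` directive syntax, the `resolve_includes` name and the `load` callback are all assumptions made for illustration.

```python
import re

# Assumed directive syntax: #include "file.glsl" on a line of its own.
INCLUDE_RE = re.compile(r'^[ \t]*#[ \t]*include[ \t]+"([^"]+)"[ \t]*$', re.MULTILINE)


def resolve_includes(source, load, _stack=()):
    """Replace each #include line with the recursively expanded file text.

    `load` is any callable that maps a file name to its source text
    (hypothetical; a real tool would read from disk or an asset pack).
    """
    def expand(match):
        name = match.group(1)
        if name in _stack:
            chain = " -> ".join(_stack + (name,))
            raise ValueError("circular include: " + chain)
        return resolve_includes(load(name), load, _stack + (name,))

    return INCLUDE_RE.sub(expand, source)


# Usage with an in-memory "file system".
files = {
    "lighting.glsl": "vec3 lambert(vec3 n, vec3 l) { return vec3(max(dot(n, l), 0.0)); }",
    "common.glsl": '#include "lighting.glsl"\n#define PI 3.14159',
}
main_source = '#include "common.glsl"\nvoid main() {}\n'
expanded = resolve_includes(main_source, files.__getitem__)
print(expanded)
```

This sketch pastes a file in every time it is named, so header guards (or a "seen" set) would still be needed to avoid duplicate definitions.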

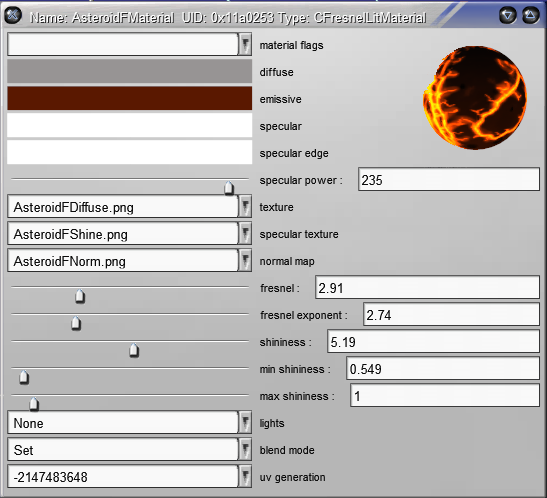

Clayworks (and ExoMiner) makes use of material objects for surface shading. These are objects, shared between many instances, that describe surface rendering properties. Previously, adding a new material in Clayworks was tedious: I'd have to create a new C++ class, add a bunch of properties and then manually match these up with shader constants. It's another one of those things I've been meaning to rewrite for a while - shaders and other scripts should make development more fluid but when you have to write hand-crafted C++ boilerplate, it becomes slow and tedious.

I thought that this would be the ideal time to investigate ways of automating this process for Clayworks and ExoMiner (which uses a different engine). The fly in the ointment here is that the platform I'm converting from (DirectX/HLSL) has far better tools built in for this than the platform I'm converting to (OpenGL/GLSL) in the form of semantics and annotations - features that, for whatever reason, I haven't made use of until now.

As I mentioned before, GLSL is rather minimalist so if you want fancy features like annotations then you'd better write them yerself. I did google for prior work (and I'm sure people have done this sort of thing before, even if they aren't sharing) but the best I could come up with were some 10-year-old discussions that ended acrimoniously.

Now created with less tedium - new shaders can be edited and their properties updated whilst the game or editor is running

Here are some example GLSL annotations. This won't compile in vanilla GLSL (which has no semantics or annotations) or in HLSL (thanks to the 'lowp' and 'vec4' keywords, which aren't part of HLSL).

uniform lowp vec4 material_diffuse : DIFFUSE < string Object = "Material"; string UIName = "Diffuse"; > = {1,1,1,1};

uniform lowp vec4 material_specular : SPECULAR < string Object = "Material"; string UIName = "Specular"; > = {1,1,1,1};

uniform lowp vec4 material_specular_edge : SPECULAR < string Object = "Material"; string UIName = "Specular Edge"; > = {1,1,1,1};

uniform lowp vec4 material_emissive : EMISSION < string Object = "Material"; string UIName = "Emission"; > = {0,0,0,0};

uniform lowp vec4 material_ambient : AMBIENT < string Object = "Material"; string UIName = "Ambient"; > = {1,1,1,1};

The default values are parsed and stored by the preprocessor and then stripped out rather than let the driver handle these. As mentioned here, some drivers do not implement these correctly.
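As a rough illustration of that parse-and-strip step, here is a Python sketch that pulls the semantic, annotations and default out of declarations shaped like the ones above and emits plain GLSL. It is not the actual preprocessor: the regexes, the `strip_annotations` name and the dictionary layout are assumptions, and it only copes with string annotations and brace-style defaults.

```python
import re

# Matches: uniform [precision] type name [: SEMANTIC] [< annotations >] [= {defaults}];
DECL_RE = re.compile(
    r'uniform\s+(?:(?P<precision>lowp|mediump|highp)\s+)?'
    r'(?P<type>\w+)\s+(?P<name>\w+)'
    r'(?:\s*:\s*(?P<semantic>\w+))?'
    r'(?:\s*<(?P<annotations>[^>]*)>)?'
    r'(?:\s*=\s*\{(?P<default>[^}]*)\})?'
    r'\s*;'
)
# Matches one annotation such as: string UIName = "Diffuse";
ANNOT_RE = re.compile(r'(\w+)\s+(\w+)\s*=\s*"([^"]*)"\s*;')


def strip_annotations(source):
    """Return (plain_glsl, uniforms); uniforms holds the stripped metadata."""
    uniforms = []

    def rewrite(m):
        pairs = ANNOT_RE.findall(m.group("annotations") or "")
        default = m.group("default")
        uniforms.append({
            "name": m.group("name"),
            "type": m.group("type"),
            "semantic": m.group("semantic"),
            "annotations": {key: value for _type, key, value in pairs},
            "default": [float(x) for x in default.split(",")] if default else None,
        })
        precision = m.group("precision")
        qualifiers = "uniform " + (precision + " " if precision else "")
        return qualifiers + m.group("type") + " " + m.group("name") + ";"

    return DECL_RE.sub(rewrite, source), uniforms


annotated = (
    'uniform lowp vec4 material_diffuse : DIFFUSE < string Object = "Material"; '
    'string UIName = "Diffuse"; > = {1,1,1,1};\n'
    'uniform lowp vec4 material_emissive : EMISSION < string Object = "Material"; '
    'string UIName = "Emission"; > = {0,0,0,0};\n'
)
plain, uniforms = strip_annotations(annotated)
print(plain)
print(uniforms[0])
```

The stored defaults can then be uploaded by the application after linking, so nothing depends on how a given driver treats uniform initialisers.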

I considered a few different options for annotations but decided that, seeing as it was a standard of sorts already, I'd try and implement the SAS system used in HLSL and Cg. I should mention that at this point, my intention was to write the simplest piece of code I could to parse out annotations from the source and then spit out some nice, simple GLSL code. I did not intend (honestly now) to write a C-style preprocessor, a full expression evaluator and a tight bit of code that can happily and efficiently make sense of and build an Abstract Syntax Tree out of just about any shader you care to throw at it. This is what we generally refer to as 'feature creep' but I swear to goodness that it was the devil in the details that made me do it.

In trying to parse the code, I found that it was necessary to understand various forms of type declaration, determine which objects were arrays, work out what the size of those arrays was (which required me to write a full C-style preprocessor as my shaders had some funky macros in them) and handle defaults (which some GLSL implementations fail to handle correctly, so best do them here and strip them out).
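To show why array sizes drag a preprocessor and an expression evaluator along with them, here is a much-reduced Python sketch. It is nowhere near a full C-style preprocessor: it assumes object-like `#define`s only (no function-like macros, no `#if`), plain integer arithmetic, and made-up names such as `array_sizes`.

```python
import ast
import operator
import re

DEFINE_RE = re.compile(r'^[ \t]*#[ \t]*define[ \t]+(\w+)[ \t]+(.+?)[ \t]*$', re.MULTILINE)
ARRAY_RE = re.compile(r'uniform\s+(?:\w+\s+)*?(\w+)\s+(\w+)\s*\[([^\]]+)\]')

# Note: C division truncates toward zero; floor division only agrees for
# non-negative operands, which is good enough for array sizes.
OPS = {ast.Add: operator.add, ast.Sub: operator.sub, ast.Mult: operator.mul,
       ast.Div: operator.floordiv, ast.Mod: operator.mod}


def expand(expr, defines, depth=0):
    """Textually substitute object-like macros, wrapping each body in parens."""
    if depth > 32:
        raise ValueError("macro expansion too deep (recursive #define?)")

    def sub(m):
        word = m.group(0)
        if word in defines:
            return "(" + expand(defines[word], defines, depth + 1) + ")"
        return word

    return re.sub(r'[A-Za-z_]\w*', sub, expr)


def eval_int(node):
    """Evaluate a small integer expression tree without calling eval()."""
    if isinstance(node, ast.Expression):
        return eval_int(node.body)
    if isinstance(node, ast.Constant) and isinstance(node.value, int):
        return node.value
    if isinstance(node, ast.BinOp) and type(node.op) in OPS:
        return OPS[type(node.op)](eval_int(node.left), eval_int(node.right))
    if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
        return -eval_int(node.operand)
    raise ValueError("unsupported expression")


def array_sizes(source):
    """Map each uniform array name to its evaluated element count."""
    defines = dict(DEFINE_RE.findall(source))
    sizes = {}
    for _type, name, expr in ARRAY_RE.findall(source):
        tree = ast.parse(expand(expr, defines).strip(), mode="eval")
        sizes[name] = eval_int(tree)
    return sizes


shader = """
#define MAX_BONES 32
#define ROWS_PER_BONE 3
#define PALETTE_SIZE (MAX_BONES * ROWS_PER_BONE)
uniform highp vec4 bone_palette[PALETTE_SIZE];
uniform lowp vec4 light_colors[2 + 2];
"""
sizes = array_sizes(shader)
print(sizes)
```

Borrowing Python's parser for the arithmetic is a shortcut that only works for this toy case; C literal suffixes, octal constants and conditional compilation all need a real preprocessor.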

This now allows me to write SAS-style annotations in GLSL code, which makes authoring shaders in Clayworks much easier. Whereas previously I had to write a lot of tedious boilerplate code, now it's just a little addition to the shader file and the material is ready to edit; it's even possible to edit the shader while the program is running and have that change the list of tweakable properties. All of the serialisation of material settings 'just works' automagically too, which is nice.
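A sketch of how a data-driven material like that might hang together, in Python rather than the C++ Clayworks uses. Everything here is hypothetical: the `Material` class, the shape of the parsed-uniform dictionaries and the use of JSON for serialisation are assumptions, not a description of the real code.

```python
import json


class Material:
    """Tweakable values for uniforms whose Object annotation is "Material"."""

    def __init__(self):
        self.values = {}    # uniform name -> list of numbers
        self.ui_names = {}  # uniform name -> label shown in the editor

    def sync(self, uniforms):
        """Rebuild the property list from freshly parsed shader metadata.

        Values already set are kept for uniforms that survive the reload;
        new uniforms start from their declared default.
        """
        old = self.values
        self.values, self.ui_names = {}, {}
        for u in uniforms:
            if u["annotations"].get("Object") != "Material":
                continue
            name = u["name"]
            if name in old:
                self.values[name] = old[name]
            else:
                self.values[name] = list(u.get("default") or [])
            self.ui_names[name] = u["annotations"].get("UIName", name)

    def to_json(self):
        return json.dumps(self.values, sort_keys=True)

    def load_json(self, text):
        # Ignore saved values for properties the shader no longer declares.
        for name, value in json.loads(text).items():
            if name in self.values:
                self.values[name] = value


# Hypothetical metadata, as a shader parser might report it.
diffuse = {"name": "material_diffuse", "default": [1, 1, 1, 1],
           "annotations": {"Object": "Material", "UIName": "Diffuse"}}
emissive = {"name": "material_emissive", "default": [0, 0, 0, 0],
            "annotations": {"Object": "Material", "UIName": "Emission"}}

material = Material()
material.sync([diffuse])
material.values["material_diffuse"] = [0.8, 0.2, 0.2, 1.0]  # user tweak in the editor
material.sync([diffuse, emissive])  # shader edited while the program is running
saved = material.to_json()
print(saved)
```

Because the property list is derived from the shader rather than from a hand-written class, adding a uniform is the only step needed to get a new editable, serialisable property.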

I didn't use flex/bison to do the AST construction (like I said, I was trying to 'keep it simple, stupid' and the output of flex ain't pretty) but it works and the code is quite clean. I may end up using those tools eventually as every other shader parser I've seen (ANGLE, GLSL optimizer) makes use of them.

Which brings me to an observation - whilst a lot of this preprocessing happens at production time (products likely ship with optimized or even obfuscated and/or binary shaders), these shaders are being parsed around four times - once by my preprocessor, again by the optimizer, then (on Windows) by ANGLE and finally by the driver itself. This seems a waste to me and I'd rather these disparate steps shared data structures. It'd be good to try and integrate these projects. I intend to open source this piece of work on GitHub. I've made a repo here, although it's empty at the time of writing. I have to extract the code from my own libraries first.

The next step is to make the code parse full HLSL code and spit out GLSL, grabbing technique and pass information as it does so. Clayworks does make extensive use of those features so rather than re-implement all those shaders, it'll be less work to write a translator for HLSL. That will have to wait for a little while though, as I've achieved the original goals for this project and there are only so many hours in the day.

Please let me know if you're aware of any open source libraries with the same aims that already exist.